How to handle corrupt data or bad records in Spark Scala

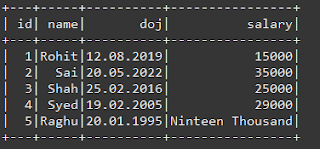

Input :

Output:

Solution:

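// With this approach, corrupt values are shown as null.

// First, define the schema. (The reads below pass the columns as a DDL string instead.)

import org.apache.spark.sql.types._

val schema = StructType(Array(
  StructField("id", IntegerType, true),
  StructField("name", StringType, true),
  StructField("doj", DateType, true),
  StructField("salary", IntegerType, true)
))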

val df = spark.read
  .format("csv")
  .option("header", "true")
  .schema("id Integer,name String,doj String,salary Integer")
  .option("mode", "permissive") // malformed fields are set to null instead of failing the read
  .load("file:///D:/employeeinfo.csv")

df.show()
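In permissive mode the malformed fields become null, which hides what the original line looked like. Here is a minimal sketch of one way to keep the raw text, assuming Spark 2.3 or later: add an extra string column to the schema and point the `columnNameOfCorruptRecord` option at it. The sample rows and the names `CorruptRecordSketch` and `sampleLines` are hypothetical stand-ins, not the contents of employeeinfo.csv; `_corrupt_record` is Spark's default name for this column.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object CorruptRecordSketch {
  // Hypothetical rows standing in for employeeinfo.csv; two of them are malformed.
  val sampleLines: Seq[String] = Seq(
    "id,name,doj,salary",
    "1,Asha,2020-01-15,50000",
    "two,Ravi,2019-07-01,42000", // id is not an integer
    "3,Meena,2021-03-10,abc"     // salary is not an integer
  )

  def load(spark: SparkSession): DataFrame = {
    import spark.implicits._
    spark.read
      .option("header", "true")
      .option("mode", "permissive")
      .option("columnNameOfCorruptRecord", "_corrupt_record")
      // The four data columns plus a string column that receives the raw bad line.
      .schema("id Integer, name String, doj String, salary Integer, _corrupt_record String")
      .csv(sampleLines.toDS())
      // Caching avoids Spark's restriction on queries that reference only the corrupt-record column.
      .cache()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("corrupt-record-sketch")
      .master("local[*]")
      .getOrCreate()
    val df = load(spark)
    // Rows that failed to parse, with their original text preserved.
    df.filter(df("_corrupt_record").isNotNull).show(false)
    // Rows that parsed cleanly, without the helper column.
    df.filter(df("_corrupt_record").isNull).drop("_corrupt_record").show()
    spark.stop()
  }
}
```

Splitting on `_corrupt_record` gives both the clean rows and the rejected lines from a single read, so the bad lines can be written out or inspected separately.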

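// To store bad records in a separate location instead of showing them as null in the table, use badRecordsPath.

// Note: badRecordsPath is a Databricks option; open-source Apache Spark does not provide it.

val df = spark.read
  .format("csv")
  .option("header", "true")
  .schema("id Integer,name String,doj String,salary Integer")
  .option("badRecordsPath", "D:/tmp/") // bad records are written under this directory
  .load("file:///D:/employeeinfo.csv")

df.show()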

// To drop bad records from the result entirely, use dropmalformed mode.

val df = spark.read
  .format("csv")
  .option("header", "true")
  .schema("id Integer,name String,doj String,salary Integer")
  .option("mode", "dropmalformed") // rows that do not match the schema are discarded
  .load("file:///D:/employeeinfo.csv")

df.show()
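To see the effect of `dropmalformed` without the original file, here is a small sketch that runs the same kind of read against in-memory lines. The sample rows and the names `DropMalformedSketch` and `sampleLines` are hypothetical. It checks the result with `collect()` rather than `count()` because, since Spark 2.4, the CSV reader may parse only the columns a query actually needs, so a bare `count()` can report rows that a full read would drop.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object DropMalformedSketch {
  // Hypothetical rows standing in for employeeinfo.csv; only the first data row is valid.
  val sampleLines: Seq[String] = Seq(
    "id,name,doj,salary",
    "1,Asha,2020-01-15,50000",
    "two,Ravi,2019-07-01,42000", // id is not an integer
    "3,Meena,2021-03-10,abc"     // salary is not an integer
  )

  def load(spark: SparkSession): DataFrame = {
    import spark.implicits._
    spark.read
      .option("header", "true")
      .option("mode", "dropmalformed")
      .schema("id Integer,name String,doj String,salary Integer")
      .csv(sampleLines.toDS())
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("drop-malformed-sketch")
      .master("local[*]")
      .getOrCreate()
    // collect() reads every column, so each row is fully parsed and validated.
    val rows = load(spark).collect()
    rows.foreach(println)
    spark.stop()
  }
}
```

Note that dropped rows are discarded silently, so this mode fits best when losing some input lines is acceptable.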
